Making a Scene – AI Metering and Loudness: How Smart Tools Are Changing the Way We Mix

Introduction: Why Loudness Matters More Than You Think

If you’ve ever turned on your favorite playlist and noticed that one song blasts out of your speakers while another sounds much softer, you’ve experienced the problem of loudness. For decades, musicians, producers, and engineers have been caught up in what many people call the “loudness wars.” Everyone wanted their track to sound bigger, bolder, and more powerful than the one that came before it. But in the modern streaming world, that fight doesn’t matter the way it used to. Platforms like Spotify, Apple Music, YouTube, and Tidal have built systems to normalize, or even out, the playback volume of every track.

And now, artificial intelligence (AI) has stepped in as a major player. Instead of spending endless hours checking meters, re-exporting mixes, or worrying whether your song will get turned down by a digital service provider (DSP), AI metering and loudness tools can handle the heavy lifting for you. Let’s take a deep dive into how we got here, what LUFS means, why normalization exists, and how AI can save your music from sounding either too weak or too aggressive.

The Loudness Wars: A Quick History Lesson

To understand why streaming services normalize audio, you need to know a little bit about the loudness wars. Back in the days of vinyl and early radio, engineers already understood that people liked music that sounded “full” and “present.” But there were physical limits. Vinyl records couldn’t handle extreme loudness without distortion, and radio stations often applied their own compression to keep everything smooth.

When CDs came along in the 1980s, the game changed. Free of the physical limits of vinyl, engineers could push mixes closer and closer to the digital ceiling. And they did. By the 1990s and 2000s, it was common for songs to be heavily compressed and limited just to make them sound louder than competing tracks. This was called the loudness war, and it led to mixes that were often crushed, with little room for dynamics.

The problem was that loudness is relative. Your track might sound loud compared to the next one, but if the listener turns down the volume knob, the advantage disappears. What stays behind is distortion, ear fatigue, and less musicality. That’s where LUFS and normalization came into play.

What Exactly Is LUFS?

LUFS stands for Loudness Units relative to Full Scale. It’s a way of measuring loudness that lines up more closely with how our ears actually hear sound: the signal is first filtered to mimic the ear’s uneven sensitivity across frequencies (a curve called K-weighting) and then averaged over time. Unlike peak meters, which show the highest level a waveform reaches, LUFS measures how loud a track feels, not just how tall the waveform looks on a screen.

There are three main types of LUFS readings. Integrated LUFS measures the loudness of an entire song from start to finish, ignoring near-silent and very quiet stretches so that a long fade or a few seconds of silence doesn’t drag the number down. Short-term LUFS looks at loudness over a sliding three-second window, which is useful for catching loud sections or quiet passages. Momentary LUFS is quicker still, using a 400-millisecond window.
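For the curious, here is a rough sketch of how a meter can compute all three readings. It follows the ITU-R BS.1770 measurement that EBU R128 builds on (K-weighting filter, 400 ms blocks overlapping by 75%, and a -70 LUFS absolute gate plus a -10 LU relative gate for the integrated value). It assumes mono audio at exactly 48 kHz, because the filter coefficients below are the published 48 kHz values, and it skips true-peak measurement. The short-term hop of one second is a simplification of my own; real meters update much more often. Pure Python keeps it dependency-free but slow, so treat it as something to read, not a tool for real sessions.

```python
import math

# ITU-R BS.1770 K-weighting coefficients, valid ONLY for 48 kHz audio (assumption: mono, 48 kHz).
# Stage 1 is a high-shelf boost; stage 2 is a high-pass ("RLB") filter.
SHELF_B = (1.53512485958697, -2.69169618940638, 1.19839281085285)
SHELF_A = (1.0, -1.69065929318241, 0.73248077421585)
HIGHPASS_B = (1.0, -2.0, 1.0)
HIGHPASS_A = (1.0, -1.99004745483398, 0.99007225036621)
RATE = 48000


def biquad(samples, b, a):
    """Run samples through one second-order IIR section (Direct Form I)."""
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def k_weight(samples):
    """Apply both K-weighting stages in series."""
    return biquad(biquad(samples, SHELF_B, SHELF_A), HIGHPASS_B, HIGHPASS_A)


def to_lufs(mean_square):
    """Convert the mean square of K-weighted mono audio to LUFS."""
    return -0.691 + 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")


def block_powers(weighted, window_s, hop_s):
    """Mean-square power of each window_s-second window, stepped by hop_s seconds."""
    size, hop = round(window_s * RATE), round(hop_s * RATE)
    return [
        sum(v * v for v in weighted[start:start + size]) / size
        for start in range(0, len(weighted) - size + 1, hop)
    ]


def momentary_lufs(samples):
    """400 ms windows, one reading every 100 ms."""
    return [to_lufs(p) for p in block_powers(k_weight(samples), 0.4, 0.1)]


def short_term_lufs(samples):
    """3 s windows; the 1 s hop is a simplification."""
    return [to_lufs(p) for p in block_powers(k_weight(samples), 3.0, 1.0)]


def integrated_lufs(samples):
    """Whole-track loudness with the two-stage gate."""
    powers = block_powers(k_weight(samples), 0.4, 0.1)
    # Absolute gate: ignore blocks quieter than -70 LUFS (near-silence).
    powers = [p for p in powers if to_lufs(p) > -70.0]
    if not powers:
        return float("-inf")
    # Relative gate: ignore blocks more than 10 LU below the level of what is left.
    threshold = to_lufs(sum(powers) / len(powers)) - 10.0
    powers = [p for p in powers if to_lufs(p) > threshold]
    return to_lufs(sum(powers) / len(powers))
```

As a sanity check, three seconds of a full-scale 997 Hz sine should read about -3.01 LUFS integrated, which is the calibration point the standard is designed around, and halving the amplitude should lower every reading by about 6 dB.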

Why does this matter? Because streaming platforms set their own LUFS targets. Spotify, for example, normalizes music to around -14 LUFS integrated by default. Apple Music hovers around -16, and YouTube sits around -13 to -14. This means if you submit a track mastered at -8 LUFS, a level that was common in the loudness-war era, the platform will automatically turn it down. On the flip side, if you submit something mastered too quietly, some platforms may turn it up, raising any noise or hiss along with the music, while others, such as YouTube, generally leave quiet tracks where they are.
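To make the turn-down (and turn-up) concrete, here is a small sketch of what a normalizing player might do with a finished master. The targets are the rough figures quoted above, and the behaviors are assumptions based on how these services are commonly reported to work: YouTube only turning loud tracks down, and the others turning quiet tracks up only as far as the peaks allow, using an assumed -1 dB ceiling. `Platform` and `playback_gain_db` are hypothetical names for illustration, not any service’s API.

```python
from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    target_lufs: float             # approximate normalization target (assumption)
    boosts_quiet_tracks: bool      # whether quieter tracks get turned up (assumption)
    peak_ceiling_db: float = -1.0  # turn-ups stop before peaks pass this level (assumption)


# Rough figures for illustration only; platforms can and do change their settings.
# YouTube reports range from about -13 to -14 LUFS; -14 is assumed here.
PLATFORMS = [
    Platform("Spotify", -14.0, True),
    Platform("Apple Music", -16.0, True),
    Platform("YouTube", -14.0, False),
]


def playback_gain_db(platform: Platform, integrated_lufs: float, peak_db: float) -> float:
    """Gain in dB a normalizing player would apply, under the assumptions above."""
    gain = platform.target_lufs - integrated_lufs
    if gain <= 0:
        return gain  # too loud: simply turned down
    if not platform.boosts_quiet_tracks:
        return 0.0  # quiet tracks are left alone
    # Turn up only as far as the peak headroom allows, and never below 0 dB.
    return max(0.0, min(gain, platform.peak_ceiling_db - peak_db))


if __name__ == "__main__":
    # A loudness-war style master: -8 LUFS integrated, peaks at -0.1 dBFS.
    for p in PLATFORMS:
        print(f"{p.name}: {playback_gain_db(p, -8.0, -0.1):+.1f} dB")
```

Running it prints -6.0 dB for Spotify, -8.0 dB for Apple Music, and -6.0 dB for YouTube: the -8 LUFS master is simply turned down everywhere. A quiet -20 LUFS master peaking at -6 dBFS, by contrast, would be left alone on YouTube and turned up by at most 5 dB elsewhere under these assumptions.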

Why Streaming Services Normalize Audio

Streaming platforms want a consistent listening experience. Imagine a playlist where one track is blasting at full volume and the next is a gentle ballad that you can barely hear. That inconsistency drives listeners crazy. To solve this, platforms normalize playback so everything feels balanced.

Normalization also helps protect listeners’ ears. Loud tracks can cause fatigue or even damage over time, especially if someone is using headphones. By keeping tracks at a consistent level, streaming companies avoid sudden jumps in volume, reduce the risk of complaints, and provide a smoother experience.

Another key reason is fairness. In the loudness war days, the loudest songs seemed to stand out more, even if they weren’t better mixes. Normalization removes that competitive edge. Now, songs with good dynamics, space, and musical feel can shine just as much as aggressive, brick-walled masters.

Too Loud vs. Just Right: The Problem of Rejection

If you send a track to a DSP that’s way louder than the standard, it usually won’t be rejected outright, but it will be altered: the service lowers the playback gain automatically. This can backfire. For example, a song mastered at -7 LUFS might sound huge in your DAW, but when Spotify turns it down to -14 LUFS, it can actually sound weaker than a track mastered at -14 LUFS in the first place. That’s because heavy limiting has already flattened the transients, so once both tracks play at the same loudness, the crushed one has less punch.
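One way to put a number on “less punch” is the peak-to-loudness ratio (PLR): the peak level minus the integrated loudness. A plain gain change moves both by the same amount, so normalization cannot give back the PLR that heavy limiting took away. The two masters below are hypothetical, with illustrative numbers, and PLR is only a rough proxy for how punchy a track feels.

```python
def peak_to_loudness_ratio(peak_db: float, integrated_lufs: float) -> float:
    """PLR in dB: how far the loudest peak sits above the average loudness."""
    return peak_db - integrated_lufs


def normalize(peak_db: float, integrated_lufs: float, target_lufs: float = -14.0):
    """Apply a plain gain change to reach the target; returns (new_peak_db, new_lufs)."""
    gain = target_lufs - integrated_lufs
    return peak_db + gain, integrated_lufs + gain


# Hypothetical masters with illustrative numbers.
hot_peak, hot_lufs = normalize(-0.1, -7.0)     # crushed master, turned down 7 dB
open_peak, open_lufs = normalize(-1.0, -14.0)  # dynamic master, left as is

print(f"hot master:     PLR {peak_to_loudness_ratio(hot_peak, hot_lufs):.1f} dB, peaks at {hot_peak:.1f} dBFS")
print(f"dynamic master: PLR {peak_to_loudness_ratio(open_peak, open_lufs):.1f} dB, peaks at {open_peak:.1f} dBFS")
```

With these numbers, both tracks end up at -14 LUFS, but the crushed master’s peaks top out around -7.1 dBFS (PLR 6.9 dB) while the dynamic one still reaches -1.0 dBFS (PLR 13.0 dB), so its drum hits jump roughly 6 dB further out of the mix.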

In some cases, particularly with YouTube or other content platforms, overly distorted or clipped tracks can actually get flagged or rejected. Even if your music isn’t technically banned, the way it plays back may disappoint you. That’s why engineers today focus less on loudness and more on balance. And this is exactly where AI tools come in.

How AI Metering Works

AI metering tools use machine learning and smart algorithms to analyze your track in real time. Instead of you staring at meters, second-guessing numbers, or checking reference tracks, the AI can instantly compare your mix against known loudness standards.

Some tools, like iZotope’s Insight, meter LUFS against common delivery targets, while AI-assisted processors like Sonible’s smart:comp analyze your audio and suggest settings. Others go further by automatically applying gain changes, EQ tweaks, or compression that keep your track within the target loudness range. A few even simulate how your track will sound across different platforms like Spotify, Apple Music, or Tidal so you know in advance how your mix will be affected.

This is powerful because it saves time, avoids guesswork, and helps your music translate well no matter where it’s played.

The Magic of AI Loudness Matching

Let’s say you finish mixing your new single. You run it through an AI loudness tool. Within seconds, the tool tells you your track is sitting at -9 LUFS. That might have been fine ten years ago, but today it’s going to get turned down by every major platform.

The AI recommends a target of around -14 LUFS. With one click, it eases off the limiting and re-balances your track so it still feels powerful but isn’t overly squashed. You export the corrected version and upload it. Now, when your fans stream it, it sounds just as strong as other songs in the playlist, without the dull, lifeless quality of an over-compressed file.
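Whatever the AI decides about the limiter, the final level move is plain arithmetic: a gain change shifts integrated loudness decibel for decibel, so one multiplication lands on the target. The sketch below shows that step, plus a deliberately crude safety limiter for the case where the gain goes up and peaks would cross an assumed -1 dBFS ceiling. `match_loudness` and `simple_peak_limiter` are hypothetical helpers; commercial tools use proprietary and far more transparent processing.

```python
import math


def db_to_gain(db: float) -> float:
    """Convert decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def simple_peak_limiter(samples, ceiling_db=-1.0, release=0.9995):
    """Very basic peak limiter: instant attack, exponential release, no lookahead.

    Every output sample stays at or below the ceiling, but without lookahead it can
    audibly distort; it is only here to keep the sketch safe.
    """
    ceiling = db_to_gain(ceiling_db)
    gain, out = 1.0, []
    for s in samples:
        # Largest gain that keeps this sample under the ceiling.
        allowed = min(1.0, ceiling / abs(s)) if s != 0 else 1.0
        if allowed < gain:
            gain = allowed  # clamp down immediately
        else:
            # Recover toward unity gain, but never past what this sample allows.
            gain = min(allowed, 1.0 - (1.0 - gain) * release)
        out.append(s * gain)
    return out


def match_loudness(samples, measured_lufs, target_lufs, ceiling_db=-1.0):
    """Move a track from measured_lufs to target_lufs with a plain gain change.

    If that pushes peaks over the ceiling (only possible when turning up), limit them,
    which leaves the result slightly below the target.
    """
    gain = db_to_gain(target_lufs - measured_lufs)
    scaled = [s * gain for s in samples]
    if max((abs(s) for s in scaled), default=0.0) > db_to_gain(ceiling_db):
        scaled = simple_peak_limiter(scaled, ceiling_db)
    return scaled
```

Note that turning a -9 LUFS master down to -14 this way only matches the level; it cannot undo limiting that was already baked in, which is why re-rendering the master with a gentler limiter is the step that actually brings the punch back.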

This automatic process avoids rejection, avoids distortion, and keeps your music competitive in a healthy way.

Comparing Too Loud vs. AI-Corrected Mixes

Imagine two versions of the same rock track. The first is mastered hot at -7 LUFS. The waveform looks like a solid block, with peaks chopped off and almost no dynamic range. In your DAW, it sounds punchy, but once Spotify turns it down, the track loses life. The drums feel flat, the vocals are harsh, and the entire song sounds smaller compared to other balanced mixes.

Now, take the AI-corrected version at -14 LUFS. The waveform has space. The quiet verses drop naturally, and the choruses hit hard without distorting. When played alongside other songs, it needs little or no turn-down. It feels alive, musical, and natural. Listeners don’t need to adjust their volume. They just enjoy the music as intended.

That difference is huge. And it shows why AI isn’t just a “cool new tool” but an essential part of modern mastering.

AI Tools You Can Try

Today there are several AI-powered metering and mastering tools that indie artists can use without hiring an expensive engineer. Services like LANDR (https://www.landr.com) and eMastered (https://emastered.com) use AI to master tracks, including loudness matching. iZotope’s Ozone (https://www.izotope.com/en/products/ozone.html) includes AI assistants that suggest mastering chains tailored to your mix. Sonible’s tools (https://www.sonible.com) provide real-time AI feedback for loudness, compression, and EQ.

Even DAWs like Logic Pro and Ableton are starting to add smarter helpers; Logic Pro’s Mastering Assistant, for example, analyzes your mix and reports its loudness before you export. This means you can create professional, platform-ready masters from your bedroom studio with far less guesswork about whether you’re hitting the right numbers.

Why AI Saves Time and Stress

Without AI, loudness metering can be a guessing game. You might bounce out three or four versions of your song, upload them to private playlists, and then play them back to hear how they sound. If one feels too soft, you go back and tweak the limiter. If another feels too harsh, you dial it down. This can eat up hours or even days.

With AI, you get feedback instantly. The software does the math for you and tells you exactly where your track stands. More importantly, it adjusts in a musical way, not just by crunching numbers. That means you spend less time worrying about meters and more time focusing on the creative side of making music.

Loudness and Artistic Freedom

One worry some artists have is that normalization and AI tools will take away creative control. After all, what if you want your track to be loud, aggressive, or raw? The truth is, loudness normalization doesn’t stop you from making bold choices. It just ensures your mix fits in with the real-world playback environment.

AI tools don’t erase your sound. They help you get the most out of it. If your vision is a crunchy, overdriven punk track, AI can make sure it stays true while still hitting the right LUFS level. If your song is a delicate acoustic ballad, AI can keep it dynamic without being buried in a playlist. In other words, AI acts as a guide, not a dictator.

The Future of AI Metering

As AI continues to evolve, we’re likely to see even smarter systems that not only correct loudness but also predict how your track will sound in different listening environments. Imagine uploading a song and instantly hearing how it plays through car speakers, earbuds, or a Bluetooth speaker, with AI suggesting tweaks for each case.

We may also see AI tools integrated directly into streaming platforms. Instead of rejecting or altering tracks after they’re uploaded, services could provide real-time mastering help during the submission process. That would save artists even more time and frustration.

Conclusion: Let AI Take the Guesswork Out of Loudness

Loudness used to be a battlefield. Engineers pushed harder and harder until music was squashed, distorted, and fatiguing. Streaming services ended that war with LUFS targets and normalization. Now, thanks to AI, artists don’t have to play the guessing game of loudness anymore.

By using AI metering and loudness tools, you can make sure your tracks hit the right standards, sound consistent across platforms, and keep your artistic vision intact. You avoid rejection, save time, and create music that feels good to listeners.

At the end of the day, that’s what matters most. Not who’s the loudest, but who makes the music that connects. And AI is here to make sure your songs connect in the best way possible.

Buy Us a Cup of Coffee!

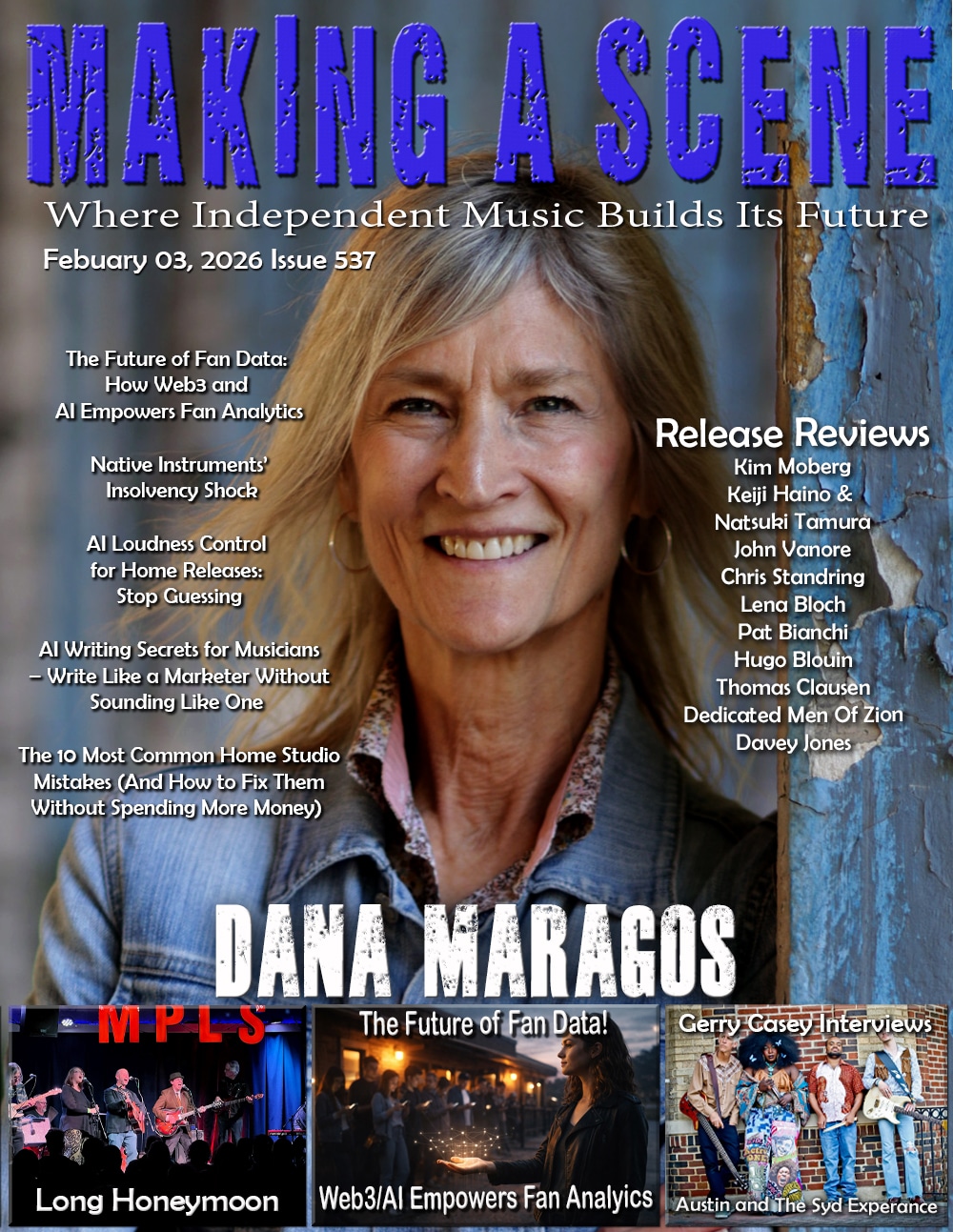

Join the movement in supporting Making a Scene, the premier independent resource for both emerging musicians and the dedicated fans who champion them.

We showcase this vibrant community that celebrates the raw talent and creative spirit driving the music industry forward. From insightful articles and in-depth interviews to exclusive content and insider tips, Making a Scene empowers artists to thrive and fans to discover their next favorite sound.

Together, let’s amplify the voices of independent musicians and forge unforgettable connections through the power of music

Make a one-time donation

Make a monthly donation

Make a yearly donation

Buy us a cup of Coffee!

Or enter a custom amount

Your contribution is appreciated.

Your contribution is appreciated.

Your contribution is appreciated.

DonateDonate monthlyDonate yearlyYou can donate directly through Paypal!

Subscribe to Our Newsletter

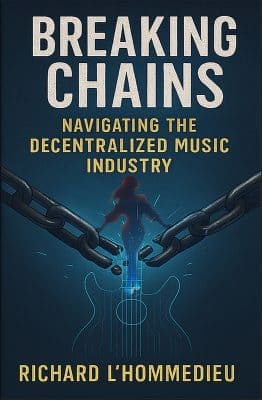
