The Sentient Studio: How AI Learns Your Sound Over Time

Making a Scene – The Sentient Studio: How AI Learns Your Sound Over Time

Listen to the podcast discussion to learn more about the future of the Sentient Studio!

There was a time when your digital audio workstation, or DAW, was nothing more than a glorified tape machine. You hit record, moved some faders, and hoped for the best. Every time you opened a new project, it felt like starting from scratch. Your EQ curves, compressor ratios, and reverb settings had to be dialed in again and again. You might have had a few saved presets, but the system itself didn’t know you.

Fast-forward to now, and that’s starting to change. With the explosion of adaptive AI tools like iZotope Neutron 5, Sonible smart:EQ 4, and iZotope Ozone 12, your studio is beginning to listen. These plugins don’t just react to your mix; they learn from it. The more you work, the better they get at predicting what you’ll want next. Slowly but surely, your studio is becoming less like a toolbox and more like a partner.

We’re entering what might be called the sentient studio era—a world where your DAW starts to understand your preferences, your workflow, and even your sonic personality. Instead of treating your software as a static piece of gear, you’ll begin to think of it as a collaborator that evolves with you.

Listening Machines

Let’s start with what’s happening right now. The latest generation of “smart” plugins uses a form of machine learning to analyze your audio, identify instruments, and make recommendations based on thousands of professional mixes.

iZotope Neutron 5 is one of the best examples of this shift. Its Learn function doesn’t just detect whether a track is a guitar or a vocal—it listens for spectral balance, dynamic range, and transients. Once it understands what’s there, it builds a customized processing chain that fits the source material. You can learn more or try a demo at www.izotope.com/en/products/neutron.html.
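iZotope doesn’t publish exactly how Neutron measures a track, so here is only a rough illustration of one classic dynamics feature any analyzer like this might compute: the crest factor, the gap between peak level and average (RMS) level. The function name `level_stats` is hypothetical, and the sketch assumes float samples in the range -1.0 to 1.0.

```python
import math


def level_stats(samples):
    """Peak level, RMS level, and crest factor for float samples in [-1.0, 1.0]."""
    if not samples:
        raise ValueError("need at least one sample")
    peak = max(abs(s) for s in samples)
    if peak == 0.0:
        raise ValueError("silent input has no defined level in dB")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    peak_db = 20.0 * math.log10(peak)  # dBFS, where 0 dB is full scale
    rms_db = 20.0 * math.log10(rms)
    # Crest factor: how far the peaks rise above the average level.
    # Spiky, transient-rich sources score high; dense or heavily compressed ones score low.
    return {"peak_db": peak_db, "rms_db": rms_db, "crest_db": peak_db - rms_db}


# Usage: one cycle of a full-scale sine wave has a crest factor of about 3.01 dB.
sine = [math.sin(2 * math.pi * n / 100) for n in range(100)]
print(round(level_stats(sine)["crest_db"], 2))
```

A snare hit would land well above that sine-wave value, while a squashed synth pad would sit close to it, which is the kind of contrast an assistant can use to tell a punchy source from a sustained one.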

Sonible smart:EQ 4 works in a similar way but takes the concept of learning a bit further. Its system compares your recording to a vast internal database of reference signals. When you click the “Learn” button, it applies an adaptive EQ curve that aims to create balance and clarity without erasing the character of your recording. Over time, you can even feed it your own “profiles” so it learns the exact kind of tone you prefer. Visit www.sonible.com/smarteq4 to explore that concept.

Then there’s Ozone 12, the mastering suite that acts like a seasoned engineer who knows your entire catalog. Its AI assistant listens to the full mix, analyzes spectral balance and loudness, and then builds a chain of EQ, compression, limiting, and stereo enhancement tailored to your track. The fascinating part is how it remembers your past choices. If you consistently pull back the highs a touch or add warmth in the low mids, Ozone begins to recognize that pattern and quietly integrates it into future suggestions. You can see this evolution yourself at www.izotope.com/en/products/ozone.html.

Each of these tools does something subtle but profound: they adapt. They study your moves and build an internal model of what you consider a good mix.

When Your Tools Start To Remember

At first, this might sound like marketing hype, but it’s not. Every time you accept or reject an AI suggestion in Neutron 5, that data point becomes part of your personal learning curve. The software adjusts its future behavior accordingly. It’s not reading your mind—it’s remembering your taste.

For example, if you tend to scoop 250 Hz out of your rhythm guitars or add a gentle boost around 5 kHz to your vocals, those patterns start to appear in future sessions. Before long, Neutron’s “intelligent” EQ guesses that you’ll probably want that same treatment again, shaving minutes off your workflow.
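Neutron’s actual memory mechanism isn’t documented here, but the basic idea of “patterns start to appear” can be sketched as simple counting. This hypothetical `HabitLog` class assumes each session is reduced to a track type plus a list of move descriptions, and suggests any move you made in at least 60% of past sessions for that track type (the threshold is an arbitrary assumption).

```python
from collections import Counter, defaultdict


class HabitLog:
    """Hypothetical log of EQ moves per track type across sessions."""

    def __init__(self):
        self.sessions = defaultdict(int)   # track type -> number of sessions seen
        self.moves = defaultdict(Counter)  # track type -> how many sessions used each move

    def record_session(self, track_type, moves):
        self.sessions[track_type] += 1
        # set() so a move repeated within one session only counts once
        self.moves[track_type].update(set(moves))

    def suggest(self, track_type, min_share=0.6):
        """Moves used in at least min_share of past sessions for this track type."""
        n = self.sessions.get(track_type, 0)
        if n == 0:
            return []
        return sorted(m for m, c in self.moves[track_type].items() if c / n >= min_share)


log = HabitLog()
log.record_session("rhythm guitar", ["cut 250 Hz", "high-pass 80 Hz"])
log.record_session("rhythm guitar", ["cut 250 Hz"])
log.record_session("rhythm guitar", ["cut 250 Hz", "boost 3 kHz"])
print(log.suggest("rhythm guitar"))
```

Here only the 250 Hz scoop shows up in every session, so it is the only move suggested.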

Sonible takes this even further with what it calls custom learning profiles. You can train smart:EQ on your own finished mixes. Once it’s listened to a few of your tracks, it builds a sonic fingerprint—a sort of spectral average of your signature sound. When you open a new session, the plugin applies that fingerprint automatically. It’s like cloning your own ears inside the software.
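Sonible doesn’t disclose how its profiles are computed, so treat the following as a hypothetical sketch of the “spectral average” idea only. It assumes each mix has already been reduced to an average level (in dB) per frequency band; the band names and the ±6 dB limit on corrections are illustrative assumptions, not smart:EQ settings.

```python
def tonal_shape(spectrum):
    """Subtract the average band level so only tonal balance remains, not overall loudness."""
    mean = sum(spectrum.values()) / len(spectrum)
    return {band: level - mean for band, level in spectrum.items()}


def build_fingerprint(mixes):
    """Average the tonal shape of several finished mixes into one profile."""
    shapes = [tonal_shape(m) for m in mixes]
    return {band: sum(s[band] for s in shapes) / len(shapes) for band in shapes[0]}


def eq_correction(track, fingerprint, max_gain_db=6.0):
    """Per-band gain (dB) nudging a new track toward the fingerprint, clamped to +/- max_gain_db."""
    shape = tonal_shape(track)
    return {
        band: max(-max_gain_db, min(max_gain_db, target - shape[band]))
        for band, target in fingerprint.items()
    }


finished = [
    {"low": -10.0, "mid": -12.0, "high": -20.0},
    {"low": -14.0, "mid": -16.0, "high": -24.0},  # quieter, but the same tonal balance
]
fingerprint = build_fingerprint(finished)
print(eq_correction({"low": -20.0, "mid": -10.0, "high": -12.0}, fingerprint))
```

Removing each mix’s average level first means a quiet master and a loud master with the same balance produce the same fingerprint, so the correction responds to tone rather than volume.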

This is the first step toward what we might call AI recall memory—where the machine begins to understand not just the technical aspects of your mix, but your aesthetic decisions too. It’s the difference between a student who can repeat instructions and one who actually learns the teacher’s style.

From Tool To Teammate

Think of your favorite assistant engineer. Over time, they learn your habits: how loud you like the snare, how you route your busses, what kind of compression you prefer on a vocal. After a few sessions, you don’t have to explain anymore—they just do it.

AI is starting to do the same thing.

Producer Tom Strahle once described his experience with Neutron’s Mix Assistant like this:

“At first, I thought the AI stuff was just a gimmick. But after a few projects, it felt like having an assistant who knew what I was going for before I even reached for a knob.”

Another engineer, Vinnie Colaiuta Jr., who frequently uses Sonible’s suite, put it this way:

“It’s not taking control—it’s learning control. I train it the same way I’d train an intern.”

That idea—training your tools—is at the core of the sentient studio. The more projects you feed your system, the more it refines its understanding of your sound. The relationship becomes circular: you shape the AI, and the AI shapes your workflow.

Before long, you start to notice something almost magical. The suggestions feel less generic and more “you.” The presets start to sound like your mixes. And instead of scrolling through endless settings, you spend your time actually creating.

When the DAW Knows You by Heart

Picture this: you open a new session in Studio One Pro or Logic Pro. You import a vocal, and before you do anything, the DAW automatically loads your favorite chain: maybe a gentle compressor, a high-pass filter, a touch of saturation. It’s not pulling a random preset. It’s pulling your preset, the one it saw you build fifteen times before.

Now extend that concept further. Imagine your DAW noticing that every time you work on a blues track, you reach for the same tape emulation and a short plate reverb. Or that whenever you record acoustic guitar, you favor a high-shelf boost at 8 kHz. The next time you start a session labeled “Blues,” it could set that up for you before you even think to ask.
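No DAW mentioned here exposes this as an API, but the “Blues” scenario is easy to model. This hypothetical `ChainMemory` class assumes the DAW logs which plugin chain you used under each session label and simply recalls the one you used most often.

```python
from collections import Counter, defaultdict


class ChainMemory:
    """Hypothetical memory of which plugin chains were used under each session label."""

    def __init__(self):
        self.history = defaultdict(Counter)  # label -> Counter of chains

    def remember(self, label, chain):
        # Store chains as tuples so identical chains count toward the same entry.
        self.history[label.lower()][tuple(chain)] += 1

    def recall(self, label):
        """Most frequently used chain for this label, or None if the label is new."""
        counts = self.history.get(label.lower())
        if not counts:
            return None
        chain, _ = counts.most_common(1)[0]
        return list(chain)


memory = ChainMemory()
memory.remember("Blues", ["tape emulation", "short plate reverb"])
memory.remember("Blues", ["tape emulation", "short plate reverb"])
memory.remember("Blues", ["compressor"])
print(memory.recall("blues"))
```

Labels are lowercased so “Blues” and “blues” share a history; a real system would probably also want fuzzier matching, such as genre tags instead of free-text names.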

That’s not fantasy. Companies like PreSonus, Ableton, and Apple are already experimenting with adaptive templates and AI-assisted mix recall. Studio One’s “Smart Templates” are a taste of that future—sessions that open pre-configured for common workflows. It’s easy to imagine a version in which those templates evolve based on your personal history. Visit www.presonus.com/products/Studio-One to see where that’s heading.

Your DAW could become something closer to a co-producer—watching, remembering, and preparing before you do.

Cross-Project Intelligence

Right now, most smart plugins operate in their own little worlds. Neutron doesn’t talk to Ozone, and Sonible doesn’t exchange notes with Studio One. But the next leap will come when these systems start sharing data across platforms.

Imagine an AI hub that gathers information from all your sessions: which EQ curves you prefer, how often you use parallel compression, what your average LUFS target is, and which reverbs make it onto your final bounces. This hub could generate a master sound profile that informs every plugin you use.
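Such a hub doesn’t exist yet, so the record format below is entirely hypothetical: each session is assumed to report its loudness target, whether parallel compression was used, and which reverbs survived to the final bounce. The sketch shows how those scattered facts could be condensed into a single shared profile.

```python
from collections import Counter
from statistics import mean


def master_profile(sessions):
    """Condense hypothetical per-session records into one profile every plugin could read."""
    reverbs = Counter(r for s in sessions for r in s["final_reverbs"])
    return {
        "avg_lufs_target": mean(s["lufs_target"] for s in sessions),
        "parallel_comp_rate": sum(1 for s in sessions if s["parallel_comp"]) / len(sessions),
        "favorite_reverb": reverbs.most_common(1)[0][0] if reverbs else None,
    }


sessions = [
    {"lufs_target": -14.0, "parallel_comp": True, "final_reverbs": ["plate"]},
    {"lufs_target": -12.0, "parallel_comp": False, "final_reverbs": ["plate", "hall"]},
    {"lufs_target": -13.0, "parallel_comp": True, "final_reverbs": []},
]
print(master_profile(sessions))
```

An EQ could read `favorite_reverb` and a limiter could read `avg_lufs_target` from the same profile, which is the “identity instead of presets” idea in practice.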

You’d no longer have to think in terms of presets; you’d think in terms of identity.

For instance, your AI profile might learn that your vocal mixes are usually warm with controlled highs and gentle glue compression. The next time you record, every relevant plugin—from EQ to limiter—would initialize with those tendencies baked in. Your workflow becomes an extension of your personality.

This concept isn’t far off. Cloud-based systems already allow for user-specific settings to be recalled across devices. Integrating machine learning into that cloud memory is simply the next step.

The Role of Context and Emotion

So far we’ve talked about technical adaptation—EQ curves, compression ratios, and so on. But what about emotional adaptation?

That’s where companies like Cyanite.ai and Musiio come in. These platforms analyze music for mood, energy, and emotional tone. Cyanite, for instance, can tell whether a track feels “uplifting,” “melancholic,” or “cinematic.” Visit www.cyanite.ai to see its mood-mapping tools.

If a DAW integrated this type of emotional intelligence, it could automatically adjust your mix vibe based on intent. Working on a dreamy ambient track? Your AI could soften the transients, widen the stereo field, and add gentle reverb tails—all before you ask. Mixing an aggressive punk song? It could tighten the low end, enhance attack, and reduce ambience to keep things raw.
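This sketch does not use Cyanite’s or Musiio’s actual outputs; it simply assumes some classifier has already produced a mood label such as “dreamy” or “aggressive.” The mood names, parameter names, and offset amounts are all invented for illustration. The point is that the mood shifts your own defaults rather than replacing them with a generic preset.

```python
# Hypothetical offsets per mood label; the labels and numbers are illustrative only.
MOOD_OFFSETS = {
    "dreamy": {"attack_db": -3.0, "stereo_width_pct": 20.0, "reverb_decay_s": 1.5},
    "aggressive": {"attack_db": 3.0, "stereo_width_pct": -10.0, "reverb_decay_s": -0.8},
}


def apply_mood(defaults, mood):
    """Start from the user's own defaults and shift them by the offsets for a mood label."""
    result = dict(defaults)  # never modify the stored defaults
    for param, delta in MOOD_OFFSETS.get(mood, {}).items():
        result[param] = result.get(param, 0.0) + delta
    # Keep the shifted values in a usable range.
    if "reverb_decay_s" in result:
        result["reverb_decay_s"] = max(0.1, result["reverb_decay_s"])
    if "stereo_width_pct" in result:
        result["stereo_width_pct"] = min(200.0, max(0.0, result["stereo_width_pct"]))
    return result


my_defaults = {"attack_db": 0.0, "stereo_width_pct": 100.0, "reverb_decay_s": 1.2}
print(apply_mood(my_defaults, "dreamy"))
print(apply_mood(my_defaults, "aggressive"))
```

An unknown mood leaves the defaults untouched, so the system falls back to your usual sound instead of guessing.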

Over time, the DAW wouldn’t just remember your sound—it would understand your feel. It would know how you like your music to hit emotionally.

When the Machine Predicts the Musician

Here’s where things start to get spooky, in the best possible way. Once your studio learns your patterns, it can begin to anticipate your next moves.

Say you import a vocal labeled “Lead Vox.” The AI has seen you notch 200 Hz from this type of track dozens of times. It automatically applies that cut, plus a subtle de-esser and a compressor at your usual ratio. You didn’t even touch a knob.

That’s predictive mixing—and it’s coming sooner than you think.

The key advantage here isn’t laziness; it’s speed. Every artist has creative momentum, and technical tasks can interrupt that flow. When your DAW predicts your moves, it keeps you in the zone. You stay focused on the song, not the settings.

Producer Erin Frayne, who beta-tested early versions of iZotope’s AI assistants, described it like this:

“After six months with Neutron and Ozone, I realized they were doing what my brain already wanted to do. It’s like my DAW learned my taste.”

That realization captures the essence of a sentient studio—it doesn’t replace intuition; it mirrors it.

Collaboration, Not Replacement

Some musicians still fear that AI will take their jobs, but that fear misunderstands the relationship. AI isn’t here to replace the engineer any more than a calculator replaced the mathematician. It just handles the tedious parts faster.

By letting AI manage gain staging, spectral balance, and repetitive tasks, you free your human brain for creativity—the one thing machines can’t fake. The more your studio learns, the less you have to think about workflow, and the more you can focus on vibe.

A great analogy comes from film colorists. Early color-grading software could auto-balance shots, but the artistry still came from the human who decided how the final scene should feel. Audio AI works the same way: it handles the groundwork so you can chase the emotion.

Your role shifts from technician to curator. Instead of twisting every dial, you guide the direction. The AI becomes your silent assistant, setting the stage for your decisions.

Building a Relationship with Your Studio

If you think of your DAW as a living thing, then each session is a conversation. Every EQ cut, compressor tweak, and effect choice tells your AI who you are. The more honestly you work, the more accurately it learns.

Over months or years, your DAW could evolve a detailed map of your preferences: how you like drums panned, how you treat the stereo bus, what dynamics feel right. When you open a new project, it’s as if you’re picking up a conversation where you left off.

This kind of relationship turns the studio into a feedback loop of growth. As you get better, your AI gets better too. If you start exploring jazz instead of rock, your mix patterns change, and the AI adapts. It’s an ecosystem, not a snapshot.

Technical Evolution Behind the Curtain

Underneath the friendly interface, what’s really happening is pattern recognition powered by machine-learning algorithms. Each plugin collects data about your decisions—parameter changes, plugin order, gain levels—and stores them locally or in the cloud. Over time, statistical models identify the combinations that recur.

When you open a new session, those models generate predictions. They don’t have emotions or opinions; they simply calculate probabilities based on your past. If you always cut low mids on vocals, that move becomes statistically likely.
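“Calculating probabilities based on your past” can be as simple as counting how often you accepted a suggestion. This hypothetical `SuggestionModel` class uses add-one (Laplace) smoothing so a brand-new suggestion starts at a neutral 50% instead of an overconfident 0% or 100%; the 0.8 auto-apply threshold is an arbitrary assumption, and commercial plugins may use very different models.

```python
from collections import defaultdict


class SuggestionModel:
    """Estimate how likely a user is to accept a suggested move, per track type."""

    def __init__(self):
        self.shown = defaultdict(int)
        self.accepted = defaultdict(int)

    def observe(self, track_type, move, accepted):
        key = (track_type, move)
        self.shown[key] += 1
        if accepted:
            self.accepted[key] += 1

    def p_accept(self, track_type, move):
        key = (track_type, move)
        # Add-one smoothing: (accepts + 1) / (times shown + 2), so unseen moves start at 0.5.
        return (self.accepted[key] + 1) / (self.shown[key] + 2)

    def should_auto_apply(self, track_type, move, threshold=0.8):
        return self.p_accept(track_type, move) >= threshold


model = SuggestionModel()
for _ in range(8):
    model.observe("vocal", "cut low mids", accepted=True)
print(model.p_accept("vocal", "cut low mids"))
print(model.should_auto_apply("vocal", "cut low mids"))
```

After eight accepted low-mid cuts the estimate reaches 9/10, so the move crosses the threshold and could be applied before you touch a knob.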

The science might be invisible, but its effects are audible. Mixes start faster, balances come together sooner, and your creative decisions feel more natural. The machine becomes part of your muscle memory.

From Templates to Training Data

Traditional engineers relied on templates—preset signal chains and bus routings—to save time. Adaptive AI takes that same idea and supercharges it. Instead of fixed templates, you have evolving ones that refine themselves with every session.
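One simple way a template could “refine itself with every session” is an exponential moving average: each new session pulls the stored setting a fraction of the way toward what you actually used. The function name and the blend factor `alpha=0.2` are assumptions for illustration; higher values adapt faster but forget older habits sooner.

```python
def refine_template(template, session_settings, alpha=0.2):
    """Blend the latest session's settings into a template with an exponential moving average."""
    updated = dict(template)
    for param, value in session_settings.items():
        if param in updated:
            updated[param] = (1 - alpha) * updated[param] + alpha * value
        else:
            updated[param] = value  # first time this parameter appears
    return updated


template = {"vocal_comp_ratio": 3.0}
template = refine_template(template, {"vocal_comp_ratio": 4.0, "vocal_hp_hz": 90.0})
print(template)
```

Because old sessions fade out gradually, this also covers the genre-shift case described earlier: if you start mixing jazz instead of rock, the template drifts toward your new habits over the following sessions.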

You could think of your mix history as training data. Each project is another data point that helps your studio understand what works. As the dataset grows, predictions become more accurate. Eventually, the system might even notice flaws before you do—suggesting a noise reduction step, detecting frequency masking, or recommending a reference track that matches your tone.
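Real masking detection relies on psychoacoustic models, but a crude version of “detecting frequency masking” can be sketched with band levels alone: flag any band where two tracks are both loud and close in level. The thresholds (-30 dB minimum level, within 3 dB of each other) and the per-band dB input format are hypothetical assumptions.

```python
def masking_bands(track_a, track_b, min_level_db=-30.0, max_diff_db=3.0):
    """Bands (keyed by center frequency in Hz) where both tracks are loud and close in level."""
    flagged = []
    for band in track_a.keys() & track_b.keys():
        a, b = track_a[band], track_b[band]
        if a >= min_level_db and b >= min_level_db and abs(a - b) <= max_diff_db:
            flagged.append(band)
    return sorted(flagged)


kick = {60: -8.0, 250: -18.0, 2000: -35.0}
bass = {60: -9.5, 250: -30.0, 2000: -40.0}
print(masking_bands(kick, bass))
```

Here kick and bass collide around 60 Hz, which is exactly where a system might suggest sidechain ducking or a complementary EQ cut.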

This dynamic learning process is what separates “smart” plugins from truly intelligent ones. It’s the shift from remembering settings to understanding style.

A Glimpse Into Tomorrow

So what will the sentient studio look like five years from now? Imagine opening your DAW and being greeted by a message that says:

“Hey, looks like you’re working on a funk project. I’ve set up your usual rhythm-section chain, tuned the compressors for a punchier feel, and created a mix bus that matches your previous single.”

You smile, hit record, and dive in.

Your studio could analyze your Spotify stats or fan data from platforms like Chartmetric or Cyanite.ai to understand what your listeners love most about your sound. It might subtly nudge your mix toward those frequencies or dynamics. It could even recommend mastering targets based on the streaming service you plan to release on.
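Recommending a mastering target per streaming service boils down to simple arithmetic once the master’s integrated loudness and true peak have been measured (for example with an ITU-R BS.1770 meter). The sketch below assumes those two measurements are given; the -14 LUFS figure is the commonly cited Spotify normalization level and the -1 dBTP ceiling is a common safety margin, so check each service’s current documentation before relying on either.

```python
def gain_to_target(integrated_lufs, target_lufs, true_peak_dbtp, ceiling_dbtp=-1.0):
    """Gain (dB) to move a master toward a loudness target without pushing peaks past a ceiling."""
    gain = target_lufs - integrated_lufs
    headroom = ceiling_dbtp - true_peak_dbtp
    # Turning down is always safe; turning up is capped by the available peak headroom.
    # If the cap applies, the remaining loudness would have to come from a limiter.
    return min(gain, headroom)


# A master measured at -18 LUFS with peaks at -3 dBTP, aimed at a -14 LUFS target:
print(gain_to_target(-18.0, -14.0, -3.0))
```

In that example the master wants +4 dB but only has 2 dB of headroom below the ceiling, so a plain gain change stops at +2 dB and the assistant would need to suggest limiting for the rest.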

You’d still make the final decisions, but your DAW would handle the grunt work—balancing, routing, referencing—so you could focus on artistry. The boundaries between creative and technical would blur until they’re almost indistinguishable.

That’s the promise of a truly adaptive DAW: a system that grows with you, learns your signature, and eventually becomes your personal engineer.

Ethics and Ownership of Your Sound

As exciting as this sounds, it raises some big questions. Who owns the data that represents your sonic fingerprint? If your DAW uploads that data to the cloud, is it yours or the company’s? What happens if an AI trained on your mixes ends up influencing other users?

These are new frontiers for musicians and developers alike. Transparency will be crucial. Artists should have control over whether their personal sound profiles stay private or contribute to broader datasets. The future of AI in music must protect individuality, not homogenize it.

But if handled responsibly, this evolution could be the most empowering shift since multitrack recording. Instead of fighting your tools, you’ll be teaching them. Instead of adapting to technology, technology will adapt to you.

Human Touch in a Machine World

No matter how advanced the algorithms become, they’ll never replace human emotion. The imperfections, spontaneous choices, and emotional responses that define a performance can’t be coded.

That’s why the smartest use of AI in the studio isn’t automation—it’s augmentation. The best producers will be those who treat AI as an extension of their creativity, not a crutch. When you let the machine handle the repetitive stuff, you’re free to chase the goosebumps moments—the parts that make a song unforgettable.

The sentient studio doesn’t take the soul out of music; it helps you focus on it.

Try It Yourself

If you want to experience this shift firsthand, start with these platforms:

- iZotope Neutron 5 – https://www.izotope.com/en/products/neutron.html
- iZotope Ozone 12 – https://www.izotope.com/en/products/ozone.html
- Sonible smart:EQ 4 – https://www.sonible.com/smarteq4
- Cyanite.ai – https://www.cyanite.ai
- Musiio – https://www.musiio.com
- PreSonus Studio One Pro – https://www.presonus.com/products/Studio-One

Load them up, experiment, and pay attention to how they respond to you. Notice how each project feels a little more familiar than the last. That’s the sound of your studio learning.



Subscribe to Our Newsletter

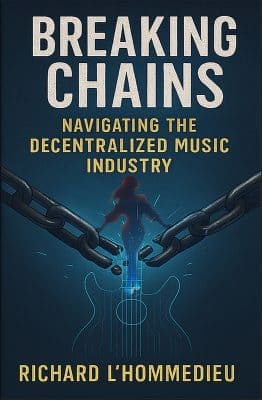

