Emotion-Driven Mixing: How AI Reads Feelings to Shape the Sound

Making a Scene Presents – Emotion-Driven Mixing: How AI Reads Feelings to Shape the Sound

Listen to the Podcast Discussion to Gain More Insight Into Mixing for the Mood

There’s a quiet revolution happening in the studio, and it has nothing to do with new mics or fancy compressors. It’s about something deeper. For the first time ever, we have AI tools that can actually read the emotional tone of music. Not just the key, tempo, or waveform shape. I’m talking about mood, feeling, energy, and intensity. This is called emotion-driven mixing, and it’s changing everything for indie artists, bedroom producers, and even film scorers who need to tell a story through sound.

The wild part is that this idea used to sound like science fiction. Nobody believed a machine could listen to a track and say, “Hey, this chorus feels hopeful but the drums are mixed too dark.” But here we are. Platforms like Cyanite at https://www.cyanite.ai and Musiio at https://www.musiio.com already analyze emotion and mood for labels and publishers. Now this same tech is coming into the mixing room, the scoring studio, and even the laptop in your bedroom setup.

This article breaks down how AI reads feelings right from the waveform, how that information shapes mix decisions, how it works for film scoring, and where this whole thing is going. And we’ll keep it simple, real, and useful for indie artists who want to take back control from the gatekeepers.

The Rise of “Emotional AI” and Why It Matters

For decades, mixing was about balancing technical things like EQ, compression, reverb, panning, and loudness. But music isn’t just technical. It’s emotional. A sad ballad needs space. An angry punk track needs impact. A hopeful pop chorus needs lift. Every feeling has a sound, even if nobody teaches you that in school.

Labels have known this for years, which is why they spent huge money on emotional analytics to see how songs “felt” to listeners. Now the same tech is available to indie artists for free or at low cost. Tools like Cyanite and Musiio break down tracks into emotional tags like warm, dark, angry, joyful, mellow, epic, anxious, or dreamy. They read the emotion using machine learning models trained on large catalogs of mood-tagged songs, so your track is judged against patterns learned from millions of examples rather than compared to each song one by one.

Cyanite at https://www.cyanite.ai is used by Universal and Sony to tag huge catalogs. Musiio at https://www.musiio.com powers AI discovery for Beatport and others. They look at your waveform, chord movement, tempo, spectral balance, dynamic range, and even how vocals sit in the mix. The AI then maps those signals onto emotional “clusters.” These clusters are based on how real people react to sound.
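To make the “clusters” idea concrete, here is a minimal Python sketch. It assumes an upstream model has already boiled your track down to two numbers, valence (how positive it feels) and arousal (how energetic it feels), and then picks the nearest emotion tags. The centroid coordinates are made-up illustrations, not Cyanite’s or Musiio’s actual model.

```python
import math

# Hypothetical emotion centroids in a valence/arousal plane.
# Valence: -1 (negative) .. +1 (positive). Arousal: -1 (calm) .. +1 (energetic).
# Illustrative coordinates only, not taken from any commercial tagger.
EMOTION_CENTROIDS = {
    "joyful": (0.8, 0.6),
    "epic": (0.4, 0.9),
    "angry": (-0.7, 0.8),
    "anxious": (-0.5, 0.4),
    "dark": (-0.6, -0.2),
    "sad": (-0.7, -0.6),
    "mellow": (0.3, -0.6),
    "dreamy": (0.5, -0.3),
}


def nearest_emotions(valence, arousal, k=3):
    """Return the k tags whose centroids sit closest to (valence, arousal)."""
    ranked = sorted(
        EMOTION_CENTROIDS,
        key=lambda tag: math.dist((valence, arousal), EMOTION_CENTROIDS[tag]),
    )
    return ranked[:k]


if __name__ == "__main__":
    print(nearest_emotions(0.6, 0.7))  # ['joyful', 'epic', 'dreamy']
```

Returning several nearby tags instead of one mirrors how these services hand back a handful of mood labels per track.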

That’s where things get interesting. When AI understands the emotion behind your track, it can start helping you shape the sound to amplify that emotion. Suddenly the mix becomes more than EQ and compression. It becomes emotional storytelling.

How AI Reads Emotion Inside the Waveform

To understand emotion-driven mixing, you have to know what AI is actually looking at inside your audio. When tools like Cyanite or Musiio listen to a song, they break the sound into slices and measure things like brightness, punch, space, texture, motion, and harmonic richness.
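Here is a rough sketch of that slicing step in plain Python. It chops a mono signal into overlapping frames, then measures brightness as the spectral centroid and punch as the crest factor (peak versus RMS level). These are standard audio descriptors chosen for illustration; the commercial tools don’t publish their exact features. The DFT is written out by hand for readability, so it is slow on long audio.

```python
import math


def frames(signal, frame_size, hop):
    """Slice a mono signal into overlapping frames of frame_size samples."""
    return [signal[i:i + frame_size]
            for i in range(0, len(signal) - frame_size + 1, hop)]


def spectral_centroid(frame, sample_rate):
    """Brightness proxy: magnitude-weighted mean frequency in Hz.

    Uses a naive DFT over the non-negative frequency bins for readability.
    """
    n = len(frame)
    weighted = total = 0.0
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(frame))
        magnitude = math.hypot(re, im)
        weighted += magnitude * k * sample_rate / n
        total += magnitude
    return weighted / total if total > 0 else 0.0


def crest_factor_db(frame):
    """Punch proxy: peak-to-RMS ratio in dB (high = spiky, low = squashed)."""
    peak = max(abs(x) for x in frame)
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20 * math.log10(peak / rms) if rms > 0 else 0.0


if __name__ == "__main__":
    sr = 8000
    tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(256)]
    for f in frames(tone, frame_size=64, hop=32):
        print(round(spectral_centroid(f, sr)), round(crest_factor_db(f), 2))
```

A real analyzer would use an FFT library and many more descriptors, but the shape of the idea is the same: slice, measure, repeat.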

A bright tone often reads as happy or energetic. A darker tone often reads as sad or intimate. A wide stereo field feels open. A narrow field feels lonely. Heavy compression feels aggressive. Soft dynamics feel calm. Even the rhythm and shape of the vocal breaths can matter.
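A toy version of those rules of thumb might look like this. Stereo width is measured as the ratio of side to mid energy, and the thresholds are illustrative guesses rather than calibrated values from any product.

```python
def stereo_width(left, right):
    """Side-to-mid energy ratio: 0.0 for mono, higher for wider mixes."""
    mid = [(lft + rgt) / 2 for lft, rgt in zip(left, right)]
    side = [(lft - rgt) / 2 for lft, rgt in zip(left, right)]
    mid_energy = sum(m * m for m in mid)
    side_energy = sum(s * s for s in side)
    return side_energy / mid_energy if mid_energy > 0 else float("inf")


def mood_hints(centroid_hz, width, crest_db):
    """Map measurements onto the rough readings described above.

    Thresholds are illustrative guesses, not calibrated values.
    """
    return [
        "bright: happy/energetic" if centroid_hz > 2500 else "dark: sad/intimate",
        "wide: open" if width > 0.3 else "narrow: lonely",
        "heavily compressed: aggressive" if crest_db < 8 else "dynamic: calm",
    ]
```

Real models learn these boundaries from data instead of hard-coding them, which is why they can catch combinations a simple rule list would miss.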

The AI also reads vocal delivery. Sharp transients can feel angry or powerful. Soft attacks feel tender. And when the AI sees certain combinations of these features, it recognizes them as emotional signatures it learned from thousands of training examples.
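Attack can be measured too. This sketch assumes you already have an amplitude envelope (for example from a vocal or snare track) and times how long it takes to rise from 10% to 90% of its peak, a common rise-time convention. The 10 ms cutoff between “sharp” and “soft” is an assumption for illustration.

```python
def attack_time_ms(envelope, sample_rate, low=0.1, high=0.9):
    """Rise time from low*peak to high*peak of an amplitude envelope, in ms."""
    peak = max(envelope)
    rise = envelope[:envelope.index(peak) + 1]  # only look up to the peak
    start = next(i for i, v in enumerate(rise) if v >= low * peak)
    end = next(i for i, v in enumerate(rise) if v >= high * peak)
    return (end - start) * 1000.0 / sample_rate


def transient_character(attack_ms, sharp_below_ms=10.0):
    """Label an onset as sharp or soft (the cutoff is an assumption)."""
    return "sharp: angry/powerful" if attack_ms < sharp_below_ms else "soft: tender"


if __name__ == "__main__":
    sr = 1000
    slow_swell = [i / 99 for i in range(100)]  # linear rise from 0 to 1 over ~0.1 s
    ms = attack_time_ms(slow_swell, sr)
    print(ms, transient_character(ms))  # 80.0 soft: tender
```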

Musiio uses deep neural networks trained on massive music datasets. Cyanite mixes deep learning with human-rated emotional feedback. You can upload any track, even a rough demo, and it will spit back emotional metadata in seconds.

That emotional data is gold. It becomes the roadmap you use to shape your mix so the feeling hits harder.

When AI Tells You Your Song Feels Something You Didn’t Expect

Here’s where the rebel inside every indie artist wakes up. Sometimes you upload your demo and the AI says it feels “sad” when you wanted it to feel “powerful.” Or it says your chorus feels “calm” when you wanted it to lift. That’s not an insult. It’s insight.

Emotion-driven mixing exposes things your ears didn’t notice. Maybe the drums are too soft. Maybe the vocal reverb is too dark. Maybe the guitars are swimming in too much space. Maybe the bass is too warm and smooth for the aggressive vibe you’re chasing.

When AI gives you emotional tags, it’s like holding a mirror up to your mix. And you can choose what to do with that reflection. You’re not giving up creative control. You’re sharpening it.

Matching the Mix to the Mood: Where AI Steps In

This is where things get powerful. When you know the emotion you want, and the AI knows the emotion you currently have, the mix becomes a conversation between you and the machine.

If you want more energy but the AI says your track is mellow, you push brightness, attack, and saturation. If you want something more intimate, you pull the drums back, warm the midrange, and dry up the vocals. AI helps you hit the vibe faster because it points out the gap between intention and reality.
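That gap can be expressed in a few lines. This is a hypothetical sketch: it assumes the analysis gives you an energy score and an intimacy score between 0 and 1 (no current tool reports exactly these), and it maps the gap onto the moves described above.

```python
def suggest_moves(current, target, tolerance=0.15):
    """Compare detected vs intended mood and list mix moves to close the gap.

    current/target: dicts with hypothetical 'energy' and 'intimacy' scores, 0..1.
    """
    moves = []
    if target["energy"] - current["energy"] > tolerance:
        # Track reads mellower than intended: push it forward.
        moves += ["push brightness", "sharpen attack", "add saturation"]
    if target["intimacy"] - current["intimacy"] > tolerance:
        # Track reads bigger or busier than intended: pull it in close.
        moves += ["pull the drums back", "warm the midrange", "dry up the vocals"]
    return moves


if __name__ == "__main__":
    detected = {"energy": 0.3, "intimacy": 0.5}  # e.g. tagged "mellow"
    wanted = {"energy": 0.8, "intimacy": 0.5}
    print(suggest_moves(detected, wanted))
```

The tolerance keeps the suggestions quiet when the mix is already close to the feeling you want.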

Tools like iZotope Neutron 5 and Ozone 12 at https://www.izotope.com can already match tonal curves to reference tracks using AI. But emotion-driven mixing takes this a step further. Instead of matching another song, it matches a feeling.
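For a feel of how reference matching works under the hood, here is a generic spectral-matching sketch, not iZotope’s algorithm. It compares average band levels (in dB) between your mix and a reference, then suggests EQ gains that close part of the gap, clamped so nothing goes wild.

```python
def match_eq(mix_db, reference_db, amount=0.5, max_gain_db=6.0):
    """Suggest per-band EQ gains that move the mix toward a reference spectrum.

    mix_db / reference_db: average level per band in dB, same band names.
    amount: fraction of the difference to apply (0.5 = meet halfway).
    Gains are clamped to +/- max_gain_db to avoid extreme moves.
    """
    gains = {}
    for band, level in mix_db.items():
        diff = (reference_db[band] - level) * amount
        gains[band] = max(-max_gain_db, min(max_gain_db, diff))
    return gains


if __name__ == "__main__":
    mix = {"low": -10.0, "mid": -12.0, "high": -20.0}
    reference = {"low": -12.0, "mid": -12.0, "high": -10.0}
    print(match_eq(mix, reference))  # {'low': -1.0, 'mid': 0.0, 'high': 5.0}
```

An emotion-driven version could swap the reference track for a target curve tied to a mood. That curve would be your own assumption, since no tool publishes one.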

Sonible’s smart:EQ and smart:comp at https://www.sonible.com use AI to understand tone and dynamics. When you steer them with emotional metadata from Cyanite or Musiio, you get a mix that is tuned to the emotional core of your music.

How Emotion Tagging Could Automate Real Mix Decisions

Now let’s talk about the near future. Imagine uploading your demo into Neutron or Ozone and telling the AI, “Make it feel more hopeful.” Or, “This section needs more tension.” The AI would study the emotional metadata, then adjust warmth, reverb, brightness, and compression to match the vibe.

A sad verse might trigger darker tones, slower and gentler dynamics, and wider space. A joyful chorus might trigger more brightness, more harmonic excitement, and tighter glue compression.
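Here is one way such a “make it feel more X” control could be wired, purely as a hypothetical sketch. Each mood is a preset of mix settings (high shelf, saturation, compressor release, reverb size), and an intensity value blends from a neutral starting point toward that preset. All the numbers are placeholders, not recommendations from any plug-in.

```python
from dataclasses import dataclass, fields


@dataclass
class MixSettings:
    high_shelf_db: float    # + brighter, - darker
    saturation_pct: float   # harmonic excitement
    comp_release_ms: float  # longer = slower, gentler dynamics; shorter = tighter glue
    reverb_size: float      # 0.0 small room .. 1.0 huge space


# Placeholder values for illustration only.
NEUTRAL = MixSettings(0.0, 0.0, 150.0, 0.4)
MOOD_PRESETS = {
    "sad": MixSettings(-3.0, 0.0, 400.0, 0.8),     # darker, slower, wider space
    "joyful": MixSettings(2.5, 20.0, 80.0, 0.4),   # brighter, excited, tighter glue
}


def settings_for(mood, intensity=1.0):
    """Blend from NEUTRAL toward a mood preset; intensity runs 0.0..1.0."""
    target = MOOD_PRESETS[mood]
    blended = {}
    for f in fields(MixSettings):
        start = getattr(NEUTRAL, f.name)
        end = getattr(target, f.name)
        blended[f.name] = start + (end - start) * intensity
    return MixSettings(**blended)


if __name__ == "__main__":
    print(settings_for("joyful", intensity=0.5))
```

The intensity knob is what turns “more hopeful” into “a little more” or “a lot more” instead of an on/off switch.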

Emotion-driven mixing could become like having a film scoring assistant inside your DAW that shapes the mood for you in real time. This isn’t fantasy. This is the direction everything is heading.

The AI Film Scoring Side: Music That Follows the Feelings on Screen

Film scorers have been chasing emotion for a hundred years. Every cue is tied to a character’s emotional arc. Now AI is stepping into this world too.

Tools like AIVA at https://www.aiva.ai and Amper at https://www.shutterstock.com/ai/music create original music from emotional prompts. But even bigger is the rise of affective computing in scoring software. Affective computing means machines that can read emotion from signals like video, audio, facial movement, or scene color.

Imagine scoring a scene where a character is heartbroken. The AI reads the actor’s face, the lighting, the pacing, and the dialogue tone. It identifies sadness, tension, or hope. Then it suggests chords, textures, and instruments that fit the emotional shape of the moment.
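A common way to combine several signals like these is late fusion: each input (face, dialogue, lighting, pacing) gets its own emotion scores, and a weighted sum decides the overall mood. The sketch below assumes those per-input scores already exist; the weights and palette descriptions are hypothetical examples.

```python
# Hypothetical weights: how much each input counts toward the overall reading.
MODALITY_WEIGHTS = {"face": 0.4, "dialogue": 0.3, "lighting": 0.15, "pacing": 0.15}

# Illustrative palettes, not rules from any scoring product.
PALETTES = {
    "sadness": "minor chords, sparse piano, slow legato strings",
    "tension": "low drones, unresolved harmony, pulsing ostinato",
    "hope": "suspended chords resolving to major, warm strings",
}


def fuse(scores_by_modality):
    """Late fusion: weighted sum of each modality's emotion scores."""
    fused = {}
    for modality, scores in scores_by_modality.items():
        weight = MODALITY_WEIGHTS.get(modality, 0.0)
        for emotion, score in scores.items():
            fused[emotion] = fused.get(emotion, 0.0) + weight * score
    return fused


def suggest_palette(scores_by_modality):
    """Pick the strongest fused emotion and return it with a palette idea."""
    fused = fuse(scores_by_modality)
    top = max(fused, key=fused.get)
    return top, PALETTES.get(top, "no palette defined yet")


if __name__ == "__main__":
    scene = {
        "face": {"sadness": 0.8, "tension": 0.1, "hope": 0.1},
        "dialogue": {"sadness": 0.6, "tension": 0.3, "hope": 0.1},
        "lighting": {"sadness": 0.5, "tension": 0.5},
        "pacing": {"sadness": 0.4, "tension": 0.6},
    }
    print(suggest_palette(scene))
```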

There are early examples already. Magenta’s Tone Transfer project at https://magenta.tensorflow.org can turn one sound into another, such as a sung melody into a violin or flute line, while keeping the expressive pitch and loudness of the original performance. Scoring engines that suggest orchestration moves based on scene mood are on the way.

This doesn’t replace human composers. It frees them. Instead of spending hours guessing the emotional center of a scene, they get instant emotional direction from the machine. It lets small indie film composers punch way above their budget.

How Indie Producers Can Use Emotion-AI for Their Own Songs

You don’t need a Hollywood scoring gig to use emotional AI. As an indie artist, you can treat every song like a scene in a movie. Your verse might be the lonely moment. Your chorus might be the moment of triumph. Your bridge might be the breakdown. When you think of your song in emotional arcs, AI becomes a partner in building the story.

Upload your demo to Cyanite or Musiio. See what emotions it returns. Then use Neutron 5, Ozone 12, smart:EQ, or smart:comp to sculpt the emotional tone, and something like Waves Clarity VX at https://www.waves.com to clean up noisy vocals so the performance comes through.

Maybe you want a warmer vocal for an intimate feel. Maybe the guitars need more edge for a rebellious vibe. Maybe the drums need more compression for excitement. Everything becomes easier when you know exactly what feeling you’re chasing.

This is how you stop mixing in circles. This is how you stop guessing. You get a clear emotional target, and you fire straight at it.

How Emotion-Driven Mixing Helps with Mastering

Mastering used to be about loudness and clarity. But now even mastering engineers are starting to use emotional AI.

Ozone 12’s AI assistant analyzes tone and dynamic shape. Future AI mastering engines could look at emotional metadata too. If the track is supposed to feel peaceful, the mastering may keep softer transients and smoother highs. If the track is supposed to feel powerful, it may add transient punch and boost the upper mids.
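One way a mastering engine could use emotional metadata is to blend processing profiles by tag confidence. In this hypothetical sketch, a track tagged 60% powerful and 40% peaceful would get a proportional mix of transient, upper-mid, and high-shelf (“air”) settings. The profile values are placeholders, not settings from Ozone or any other engine.

```python
# Placeholder mastering moves per mood, in dB; not values from any real engine.
MASTER_PROFILES = {
    "peaceful": {"transient_db": -1.0, "upper_mid_db": 0.0, "air_shelf_db": -1.0},
    "powerful": {"transient_db": 2.0, "upper_mid_db": 1.5, "air_shelf_db": 0.5},
}


def blend_profiles(tag_confidences):
    """Weight each mood profile by its tag confidence and sum the settings."""
    total = sum(tag_confidences.values())
    if total <= 0:
        raise ValueError("need at least one positive confidence")
    settings = {}
    for tag, confidence in tag_confidences.items():
        weight = confidence / total
        for param, value in MASTER_PROFILES[tag].items():
            settings[param] = settings.get(param, 0.0) + weight * value
    return settings


if __name__ == "__main__":
    print(blend_profiles({"peaceful": 0.5, "powerful": 0.5}))
    # {'transient_db': 0.5, 'upper_mid_db': 0.75, 'air_shelf_db': -0.25}
```

Blending instead of picking one profile avoids jarring jumps when a track sits between two moods.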

This opens the door for indie artists to create masters that feel more alive and intentional. You don’t need a million-dollar room to master with emotion. You need emotional feedback and the right AI-powered tools.

The Near Future: When Your DAW Becomes Your Emotional Co-Producer

Here’s where things get exciting. Imagine Studio One, Logic, Cubase, or Pro Tools with a built-in emotional AI that listens as you work. It hears your draft mix, reads your lyrics, watches your dynamics, and gives suggestions.

The AI might say, “Your chorus feels uplifting, but your drums are too dark.” Or, “Your verses feel lonely, so try a smaller reverb.” Or, “Your bridge feels tense, so tighten the compression on the guitars.”
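Feedback like that could come from a simple rule table. This sketch is hypothetical: it assumes the DAW can report a detected mood per section plus a few measurements (drum brightness, reverb decay, guitar compression ratio), and the thresholds are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    mood: str                        # mood the section is detected as
    problem: Callable[[dict], bool]  # True when the mix works against that mood
    advice: str


# Hypothetical thresholds, for illustration only.
RULES = [
    Rule("uplifting", lambda m: m["drum_centroid_hz"] < 1500, ", but your drums are too dark"),
    Rule("lonely", lambda m: m["reverb_decay_s"] > 1.5, ", so try a smaller reverb"),
    Rule("tense", lambda m: m["guitar_comp_ratio"] < 3.0, ", so tighten the compression on the guitars"),
]


def feedback(section, mood, measurements):
    """Return co-producer style notes for one section of the song."""
    return [
        f"Your {section} feels {rule.mood}{rule.advice}."
        for rule in RULES
        if rule.mood == mood and rule.problem(measurements)
    ]


if __name__ == "__main__":
    chorus = {"drum_centroid_hz": 1200, "reverb_decay_s": 1.0, "guitar_comp_ratio": 4.0}
    print(feedback("chorus", "uplifting", chorus))
```

Only rules matching the detected mood are checked, so each section gets advice that fits its own feeling.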

This isn’t replacing you. It’s amplifying you. It’s like having an emotional co-producer who never gets tired and never loses the vibe.

Now imagine the same thing inside film scoring tools. The scene plays. The AI reads facial emotion, color tone, pacing, shot type, and dramatic arc. It then suggests a harmonic palette. Maybe the strings should swell earlier. Maybe the bass should drop out in the moment of confusion. The AI follows the emotional flow like a second brain.
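Following the emotional flow can be sketched as watching a timeline of scene scores and firing cue suggestions when something changes. This assumes a video model already outputs tension and confusion scores every few seconds; the thresholds and the lead time (so the strings swell a little before the tension peaks) are hypothetical.

```python
def cue_events(timeline, swell_at=0.6, confusion_at=0.5, lead_s=1.5):
    """Suggest score cues from a timeline of scene emotion scores.

    timeline: list of (seconds, {"tension": x, "confusion": y}) in time order.
    Strings swell lead_s seconds before tension rises past swell_at;
    the bass drops out when confusion rises past confusion_at.
    """
    events = []
    prev_tension = prev_confusion = 0.0
    for t, scores in timeline:
        tension = scores.get("tension", 0.0)
        confusion = scores.get("confusion", 0.0)
        if prev_tension < swell_at <= tension:
            events.append((max(0.0, t - lead_s), "strings swell"))
        if prev_confusion < confusion_at <= confusion:
            events.append((t, "bass drops out"))
        prev_tension, prev_confusion = tension, confusion
    return events


if __name__ == "__main__":
    scene = [
        (0.0, {"tension": 0.2}),
        (2.0, {"tension": 0.5}),
        (4.0, {"tension": 0.7}),
        (6.0, {"tension": 0.7, "confusion": 0.8}),
    ]
    print(cue_events(scene))
```

Firing only on upward crossings keeps the suggestions to a few meaningful moments instead of one per frame.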

This is the future. And indie creators will benefit the most.

Why Big Corporations Fear This and Why Indie Artists Should Embrace It

Major labels and film studios have always controlled emotional measurement. They used it to decide which songs to push, which singers to sign, which scenes needed rescoring, which demos felt “marketable.”

Now indie artists have access to the same power for almost nothing.

Cyanite costs less than a dinner out. Musiio can be used through partner platforms. Neutron 5, Ozone 12, and Sonible’s tools are cheap compared to studio sessions. This puts emotional control back into your hands, not in the hands of gatekeepers who never cared about your art to begin with.

Emotion-driven mixing lets you shape the sound of your music so it hits harder, tells the truth faster, and reaches listeners more deeply. You don’t need a label telling you what your music should “feel like.” You can measure the feeling yourself and dial it in like a rebel scientist.

How Emotion-AI Changes the Role of the Producer

Producers used to be the emotional translators. Artists described what they wanted, and producers turned that into sound. Now emotional AI helps bridge that communication gap.

Imagine telling the AI, “Make this feel like rain hitting a window.” Or, “Make this chorus open up like a sunrise.” The AI would translate poetic emotion into tonal moves. Producers will still guide the ship, but AI will give them more emotional accuracy, faster.

This pushes producers to be more creative and less technical. The emotional choices become the main job. The machine handles the boring parts.

This also means indie producers with small setups can deliver mixes that feel huge, cinematic, and emotionally rich without needing expensive gear.

The Film Composer’s Secret Weapon: Affective Scoring AI

Film composers are already experimenting with emotional AI that reads video. Imagine a character walking through a dark hallway. The AI sees fear. It suggests low drones, slow strings, and soft percussion. Then the actor finds a door to safety. The AI detects relief. It suggests a harmonic lift. The composer then fine-tunes everything.

Projects like DeepFaceEmotion and Affectiva show that machines can already recognize basic facial expressions with useful accuracy. Now composers can use these signals inside scoring engines to match the scene feel instantly.

Tools like Soundraw at https://www.soundraw.io and Boomy at https://www.boomy.com already let you create music from emotional prompts. It won’t be long before DAWs like Cubase or tools like Kontakt integrate emotional scoring assistants that move with the scene like a living organism.

This lowers the barrier for indie filmmakers, indie scorers, and students who don’t have huge budgets. Suddenly, anyone can score a scene with emotional accuracy, even in a bedroom studio. That’s a threat to the old system, which is exactly why it’s exciting.

AI Doesn’t Replace Creativity. It Replaces Guessing.

Let’s kill the biggest myth right now. AI isn’t here to replace musicians. It’s here to wipe out the guesswork. Emotion-driven mixing doesn’t tell you what music to make. It helps you shape the music you already made in a way that matches the story in your head.

A machine can’t invent your heartbreak. A machine can’t invent your joy. But it can help you build the sound world that expresses it.

Emotion-AI is basically an emotional translator. You feed it your feelings. It spits back suggestions. You stay in control. You stay the artist. The machine becomes the assistant.

The Step-By-Step Emotional Workflow

Here is what a full emotion-driven workflow looks like when you blend music production and film scoring.

You write a song. You create a rough demo. You upload it to Cyanite or Musiio. The AI tells you the emotion it hears. You compare that to the emotion you want. You use Neutron 5, Ozone 12, smart:EQ, and smart:comp to dial in the difference. You use creative choices to push certain feelings up front. You master the track with emotional intention.
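The core of that workflow is a loop: analyze, compare, adjust, and analyze again until the mix lands close enough to the target. In this sketch, `analyze` and `adjust` are hypothetical stand-ins you would supply (for example, re-tagging a bounce and nudging an EQ); nothing here calls a real service, and the toy model in the usage block is invented.

```python
def refine_mix(analyze, adjust, target, settings, tolerance=0.05, max_rounds=10):
    """Closed loop: analyze, compare with the target, adjust, repeat.

    analyze(settings) -> emotion score in 0..1 (stand-in for re-tagging a bounce)
    adjust(settings, error) -> new settings (stand-in for your EQ/comp moves)
    Returns (settings, rounds_used).
    """
    for rounds in range(max_rounds):
        error = target - analyze(settings)
        if abs(error) <= tolerance:
            return settings, rounds
        settings = adjust(settings, error)
    return settings, max_rounds


if __name__ == "__main__":
    # Toy model: each dB of brightness raises the "energy" score by 0.05.
    def analyze(brightness_db):
        return min(1.0, max(0.0, 0.5 + 0.05 * brightness_db))

    def adjust(brightness_db, error):
        return brightness_db + 10 * error  # proportional nudge

    print(refine_mix(analyze, adjust, target=0.8, settings=0.0))
```

The round limit matters: real taggers are noisy, and you don’t want to chase a number forever.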

If you’re scoring film, you upload the scene to a scoring tool that reads emotional cues from the actors, the lighting, the pacing, and the motion. The AI suggests a musical foundation. You shape the score on top of it.

In both cases, emotion becomes the North Star. AI becomes the compass. You become the captain steering the ship. And nobody else gets to tell you what your music should feel like.

Where All of This Is Heading

Emotion-driven mixing is still new, but it’s growing fast. DAWs keep adding AI features, mastering engines are moving toward emotion awareness, and scoring software is becoming mood-adaptive. Soon your session will feel alive. Your mix tools will talk back. Your scoring tools will react to scenes in real time.

Indie artists who embrace this now will be way ahead of the game. Indie film scorers who understand emotional AI will land bigger gigs because they can deliver emotional depth faster. This is the beginning of a new era where technology listens to you instead of the other way around.

The big studios won’t like it. Too bad. The power belongs to creators now.

Final Thoughts: The Future of Emotion Is DIY

Emotion-driven mixing brings power back to the artists who actually care about the music. Machines don’t feel anything, but they can help you shape the sound of your feelings. That’s the beauty of it. You keep the humanity. AI gives you the tools.

If you’re an indie artist, this is your moment. Use the emotion engines. Use Cyanite at https://www.cyanite.ai. Use Musiio through https://www.musiio.com. Use Neutron 5 and Ozone 12 from https://www.izotope.com. Use Sonible smart tools at https://www.sonible.com. Use AIVA and scoring engines to match film scenes.

Let the old music industry keep guessing. You’re building the future by knowing exactly how your music feels and shaping it into something unforgettable.

And that’s what emotion-driven mixing really is. It’s not computers stealing art. It’s artists taking back control.

Subscribe to Our Newsletter

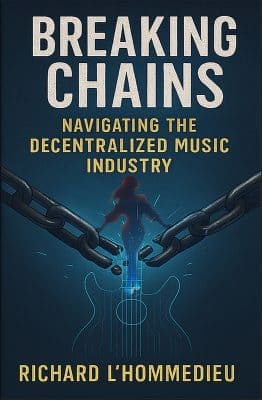
