AI for Session Musicianship: Tightening, Not Replacing

Making a Scene Presents – AI for Session Musicianship: Tightening, Not Replacing


There’s this fear floating around studios lately — that artificial intelligence is coming to take the jobs of session musicians, singers, producers, even mixing engineers. The truth? AI isn’t here to replace you. It’s here to help you sound tighter, cleaner, and more confident in the studio. It’s a tool, not a takeover.

Think of it like a really smart assistant engineer who never sleeps, never gets cranky, and can instantly fix that one snare hit you missed without changing the soul of your performance. The magic happens when you use AI to enhance what you played — not to erase it.

Let’s dig into how that works.

The Snare Story: When AI Becomes Your Assistant Engineer

Every drummer knows the feeling. You lay down a killer take — the groove feels perfect, the fills are on point, and the energy is real. But then you listen back and notice a few snare hits that don’t quite pop. Maybe the mic was a little off-axis, or you lost some power on a ghost note. You don’t want to re-record the whole thing, but you also don’t want to leave it sounding uneven.

That’s where AI-powered drum replacement tools come in, and one of the best examples is Trigger 2 by Steven Slate Drums. It’s practically an industry standard for tightening up drum tracks while keeping them human. Trigger 2 uses advanced transient detection and velocity-sensitive layering so it only replaces or reinforces hits that actually need help.

When you load Trigger 2 onto your snare track, it analyzes your recording in real time. You can tell it to layer new samples over your weak hits, or fully replace them — but the key is control. You decide how much of the original tone stays and how much reinforcement you add. If you just need to make your softer hits a little punchier, you can blend in Slate’s world-class snare samples until it feels right without losing your drummer’s touch.

The coolest part is how natural it sounds. Because Trigger 2 reads dynamics and bleed from other mics, it understands ghost notes, flams, and off-beat accents. You get consistency without that lifeless, machine-gun sound older trigger plugins used to create.
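If you’re curious what that detect-and-reinforce idea looks like in code, here’s a minimal Python sketch. It is not how Trigger 2 works internally. The function names (`detect_hits`, `reinforce`), the thresholds, and the simple peak-based detection are all assumptions made for illustration. The idea is to find each hit, leave the strong ones and the quiet ghost notes alone, and blend just enough of a sample under the weak ones.

```python
def detect_hits(signal, threshold=0.1, min_gap=4):
    """Return (index, peak) pairs for each detected hit.

    A hit starts where the absolute level reaches `threshold`, at least
    `min_gap` samples after the previous hit started.
    """
    hits = []
    last = -min_gap
    for i, x in enumerate(signal):
        if abs(x) >= threshold and i - last >= min_gap:
            peak = max(abs(v) for v in signal[i:i + min_gap])
            hits.append((i, peak))
            last = i
    return hits


def reinforce(signal, sample, target=0.8, weak_below=0.5, ghost_below=0.2):
    """Layer `sample` under weak hits only; the original audio always stays."""
    out = list(signal)
    for idx, peak in detect_hits(signal):
        if peak >= weak_below or peak < ghost_below:
            continue  # strong hits and ghost notes are left untouched
        gain = target - peak  # add only what the hit is missing
        for k, s in enumerate(sample):
            if idx + k < len(out):
                out[idx + k] += gain * s
    return out


# Toy "snare track": one strong hit, one weak hit, one ghost note.
track = [0.0] * 20
track[2], track[10], track[15] = 0.9, 0.3, 0.15
fixed = reinforce(track, sample=[1.0, 0.5])
```

In this toy example only the weak hit at index 10 gets reinforced. The strong hit and the ghost note pass through unchanged, which is the "control" part: you pick the thresholds, the tool does the tedious bit.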

Other tools like Waves InTrigger and UVI Drum Replacer do similar things using machine learning, but Slate Trigger 2 stands out for its realism. It’s built from years of studio experience with real drummers, so it knows how to feel like a performance, not a robot.

Imagine your snare take as a live performance enhanced by a studio assistant. The AI and sample layers act like a smart compressor and tone shaper, tightening your hits just enough to make them pop in the mix without changing your timing or the character of your playing. It’s still you playing: your groove, your swing, your touch. The technology just helps you translate that energy onto the record.

So next time you’re mixing drums and hear a weak snare or two, don’t panic. Load up Slate Trigger 2, let it detect your transients, and choose a sample that complements your snare. You’ll keep the feel of the take but give it the polish of a world-class recording.

Doubling Vocals: Two Takes or One Smart Plugin?

Let’s jump to the vocal booth. Vocal doubling has been a staple trick for decades. You record your main vocal, then sing the same thing again to thicken it up and make it pop in the mix. When done right, it sounds wide, rich, and powerful. When done wrong, it’s messy and phasey. And let’s be real — not every singer has the patience (or the time) to record perfect doubles for every song.

That’s where AI steps in again.

There are tools now that can take a single vocal and generate a realistic double that sounds like a second take. One of the most famous examples is Waves Abbey Road Reel ADT — modeled after the same system the Beatles used for artificial double tracking back in the day. It adds just enough variation in pitch, timing, and tone to make it feel like a new performance, not just a copy-and-paste clone.
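The basic trick behind artificial double tracking can be sketched in a few lines of Python. This is a rough illustration, not the Waves plugin’s algorithm: the function name `artificial_double` and every parameter value are assumptions. It mixes the dry vocal with a copy that is delayed by a couple dozen milliseconds, and the delay drifts slowly so the copy never lines up the same way twice. That drifting delay is what creates the small timing and pitch variations.

```python
import math


def artificial_double(dry, sample_rate, base_delay_ms=25.0,
                      wobble_ms=3.0, wobble_hz=0.5, mix=0.5):
    """Mix `dry` with a delayed copy whose delay drifts slowly.

    Assumes base_delay_ms > wobble_ms so the delay is always positive.
    """
    out = []
    for n, x in enumerate(dry):
        # Slow sine wave moves the delay around its base value.
        lfo = math.sin(2 * math.pi * wobble_hz * n / sample_rate)
        delay_ms = base_delay_ms + wobble_ms * lfo
        pos = n - delay_ms * sample_rate / 1000.0
        if pos < 0:
            delayed = 0.0  # the copy has not started yet
        else:
            i = int(pos)
            frac = pos - i
            nxt = dry[min(i + 1, len(dry) - 1)]
            # Linear interpolation between neighbouring samples.
            delayed = (1 - frac) * dry[i] + frac * nxt
        out.append(x + mix * delayed)
    return out


# A single click at 1 kHz sample rate, no wobble: the copy lands 25 ms later.
click = [1.0] + [0.0] * 99
doubled = artificial_double(click, sample_rate=1000, wobble_ms=0.0)
```

With the wobble switched off, the click simply repeats 25 samples later at half volume. Turn the wobble back on and the copy starts to breathe against the original.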

But the AI game has evolved way past that. SoundID VoiceAI by Sonarworks can now actually generate backing vocals from a single performance. You can take one strong vocal track and have the software create harmonies above or below it that sound convincingly human. You can even choose the number of voices, the gender tone, and the style.

That means you could record one expressive lead take, then use AI to create natural-sounding doubles and harmonies that match your phrasing and tone exactly. No need to bring in extra singers or spend all day stacking layers.

And before you think it sounds fake — it doesn’t, if you use it right. The best results happen when your original performance already has energy and emotion. AI can’t invent soul. But it can extend it.

You can start by doubling your main vocal using a plugin like Abbey Road Reel ADT, then use VoiceAI or similar tools to add third and fifth harmonies. Pan the harmonies slightly left and right, leave the main vocal centered, and you’ll get that lush, full sound you hear on pro records — but all from one singer.
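For the panning step, here’s a small Python sketch of one common approach, a constant-power pan law. The function name `pan_gains` and the pan positions are assumptions for illustration; your DAW’s pan knob may use a different law.

```python
import math


def pan_gains(pan):
    """Return (left, right) gains for pan in [-1.0 (left), +1.0 (right)].

    Constant-power law: left**2 + right**2 stays at 1, so a part does not
    get louder or quieter as it moves across the stereo field.
    """
    angle = (pan + 1) * math.pi / 4
    return math.cos(angle), math.sin(angle)


lead = pan_gains(0.0)           # main vocal stays centered
harmony_low = pan_gains(-0.3)   # one harmony slightly left
harmony_high = pan_gains(0.3)   # the other slightly right
```

The centered lead gets equal gain in both speakers, and the two harmonies mirror each other on either side.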

What’s cool is that when you do this a few times, you start to learn how harmonies actually work. You hear how certain intervals blend, how timing affects the feel, and how to layer vocals effectively. That’s the beauty of AI in the studio — it doesn’t just help you make better music. It teaches you how to think like a producer.

Creating Vocal Harmonies the Easy Way

If you’ve ever tried to stack harmonies manually, you know it’s not easy. You might have to figure out the right key, sing a third above or a fifth below, and hope you stay in tune across takes. AI tools take away the guesswork.

With something like SoundID VoiceAI, you can literally tell it what harmonies to create — “third above,” “fifth below,” “octave down” — and it’ll generate the parts instantly. Because it’s analyzing your main vocal, the phrasing and timing match perfectly.
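Under the hood, an instruction like “fifth below” boils down to simple pitch arithmetic. The Python sketch below shows that math only. It is not VoiceAI’s interface: the `INTERVAL_SEMITONES` table and the function names are made up for illustration, and it assumes equal temperament with a fixed major third, whereas a key-aware tool would choose a major or minor third depending on the scale.

```python
# Semitone offsets for a few harmony instructions (illustrative only).
INTERVAL_SEMITONES = {
    "third above": 4,    # major third; assumes the key calls for it
    "fifth below": -7,
    "octave down": -12,
}


def pitch_ratio(semitones):
    """Frequency ratio for a shift of `semitones` in equal temperament."""
    return 2 ** (semitones / 12)


def harmony_frequency(lead_hz, interval):
    """Frequency of the harmony note for a lead note at `lead_hz`."""
    return lead_hz * pitch_ratio(INTERVAL_SEMITONES[interval])


# Lead singer holds an A at 440 Hz.
third = harmony_frequency(440.0, "third above")
fifth = harmony_frequency(440.0, "fifth below")
octave = harmony_frequency(440.0, "octave down")
```

For that 440 Hz A, the third above lands near 554 Hz (a C sharp), the fifth below near 294 Hz (a D), and the octave down at 220 Hz.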

That’s a game-changer for indie artists or small studios. You can go from a dry lead vocal to a full harmony stack in minutes. It’s not cheating. It’s efficiency. You’re still the performer, still the songwriter, still the voice — the AI is just helping you translate your ideas faster.

It’s the same way a MIDI keyboard helps a composer get notes down faster than writing by hand. You don’t question the keyboard. You just use it to create.

Bass Tightening: Locking in Without Losing the Groove

If the drummer is the heartbeat of a track, the bass player is the glue. Together, they make or break the groove. When those two lock in perfectly, the whole track breathes. But even great players can drift a few milliseconds early or late. That’s where AI comes in again — not to replace the bass player, but to help them stay locked to the drums in post.

Modern tools use intelligent transient detection to analyze both kick and bass tracks. They identify the attacks, compare them, and nudge the bass notes just enough to tighten the pocket. Some plugins even “listen” to the kick pattern and suggest where the bass should hit to maximize groove and low-end clarity.

Imagine you record a killer bass line. It feels great, but when you listen back, you notice a few notes don’t sit perfectly with the kick drum. Instead of manually sliding each note, you can use an AI tool to tighten it subtly — keeping your tone and dynamics, but giving the track more punch.
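Here’s a minimal Python sketch of that subtle nudge, assuming you already have note start times (in seconds) for the kick and the bass. The function name `tighten` and the `strength` and `window` settings are hypothetical, not taken from any particular plugin. Each bass note moves only part of the way toward its nearest kick, and notes that are far from any kick are left alone because they were probably played off the kick on purpose.

```python
def tighten(bass_onsets, kick_onsets, strength=0.5, window=0.03):
    """Nudge bass onsets toward the nearest kick onset.

    strength: 0.0 leaves the take alone, 1.0 snaps fully to the kick.
    window:   only notes within this many seconds of a kick are moved.
    Assumes kick_onsets is not empty.
    """
    tightened = []
    for t in bass_onsets:
        nearest = min(kick_onsets, key=lambda k: abs(k - t))
        offset = nearest - t
        if abs(offset) <= window:
            t = t + strength * offset  # move part of the way, keep some feel
        tightened.append(t)
    return tightened


kick = [0.0, 0.5, 1.0]
bass = [0.02, 0.49, 0.75]   # 20 ms late, 10 ms early, and an off-beat note
result = tighten(bass, kick)
```

At half strength the late note ends up 10 ms late instead of 20, the early note 5 ms early instead of 10, and the off-beat note at 0.75 stays exactly where it was played.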

This doesn’t make your playing robotic. In fact, the opposite happens. Because you can instantly hear what “locked-in” feels like, your brain starts to internalize that groove. You play tighter on your next take without even realizing it. AI becomes a teacher.

This is especially powerful when paired with smart mixing tools like iZotope Neutron 5, which can analyze your whole mix and tell you where frequencies or timing might be clashing. You learn how the kick and bass should interact sonically and rhythmically, which is something even many seasoned producers take years to master.

The end result? A mix that feels glued together — tight, groovy, and professional — without ever touching a quantize button or sucking the life out of your bass part.

AI as a Production and Mixing Mentor

One of the biggest advantages of using AI in the studio isn’t just about fixing things. It’s about learning. Tools like iZotope Ozone 12 for mastering or Waves Clarity VX for vocal cleanup don’t just make your music sound better — they show you how.

When you drop a mix into Ozone’s AI mastering assistant, it listens to your track, compares it to thousands of professional masters, and applies EQ, compression, limiting, and stereo adjustments. But here’s the cool part: you can open the interface and see every move it made. You see which frequencies were boosted, which dynamics were controlled, and how loudness targets were achieved.

Over time, that knowledge becomes second nature. You start recognizing what your mixes need before you even load the AI plugin. You begin thinking like a mastering engineer — not because the AI replaced you, but because it trained you through repetition and feedback.

The same goes for mixing assistants. When you use Neutron’s Mix Assistant, it suggests level balances and EQ curves based on what it “hears” in your session. Instead of blindly following, you can listen critically and ask yourself why it made those choices. Why did it cut 200 Hz on the guitar? Why did it boost 5 kHz on the vocal? The more you study its decisions, the better your own mixing instincts become.

In a way, AI turns every session into a lesson. You’re not just making music — you’re learning the science behind what makes it sound good.

The Human Touch: What AI Can’t Replace

Now, let’s get real. For all its power, AI still can’t replicate one crucial ingredient: feel. That’s the heartbeat of musicianship — the slight push and pull in tempo, the emotion in a vocal phrase, the unique attack of a pick on strings, the way a drummer leans back on the groove just enough to make it swing.

AI can analyze timing, pitch, and tone, but it can’t feel your intention. It doesn’t understand heartbreak, excitement, or the buzz of a live take where everyone’s in the pocket. That’s why the best producers and artists don’t use AI to make “perfect” music — they use it to make better music.

The goal isn’t perfection. It’s polish.

When AI replaces a weak snare hit, doubles your vocals, or tightens your bass, it’s helping your humanity shine through clearer. It’s cleaning the window, not changing the view.

This is where so many people misunderstand what AI in music is about. It’s not some robotic system making generic pop songs (though those exist). It’s a suite of powerful studio tools designed to let musicians spend less time fixing and more time creating.

The irony is that the more you use AI correctly, the more human your records sound — because you’re spending more energy on emotion, tone, and story, not alignment and EQ.

When AI Teaches You How to Hear

Something fascinating happens when you spend enough time with these tools. You start to develop a better ear. When an AI plugin corrects your EQ balance or enhances your snare, you begin to notice why it sounds better. You start identifying problem frequencies by ear. You start predicting what the AI will do before it does it.

That’s growth.

A young producer might use Ozone and think, “Wow, that sounds so much more open!” An experienced producer might look deeper and realize it boosted 10 kHz by 1.5 dB and added a subtle multiband compressor at 200 Hz. Over time, you start making those choices yourself. The AI helped train your instincts.
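It helps to know what a number like 1.5 dB actually means. Decibels are a logarithmic scale, and the standard conversion for signal level is gain = 10^(dB/20). A quick Python sketch (the helper names are just for illustration):

```python
import math


def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def gain_to_db(gain):
    """Convert a linear amplitude factor back to decibels."""
    return 20 * math.log10(gain)


boost = db_to_gain(1.5)      # the 1.5 dB boost from the example above
doubling = gain_to_db(2.0)   # how many dB it takes to double the amplitude
```

A 1.5 dB boost raises the amplitude by roughly 19 percent, while doubling it takes about 6 dB. That’s why small EQ moves can still be clearly audible.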

In that way, these tools act like the mentors we all wish we had in the studio — patient, precise, and always available.

You could almost say AI makes musicianship smarter. Not mechanical — but informed.

How It All Comes Together

Picture this: you’re in your home studio. The drummer tracks live using real mics. A few snare hits are soft, but that’s easy — you run UVI Drum Replacer or Slate Trigger 2 Platinum and give them just a bit more punch. The bassist nails the groove but drifts slightly on a few fills — no problem, iZotope Neutron’s transient detection helps align those spots. The vocalist gives one passionate take — you use SoundID VoiceAI to create doubles and harmonies, then run Waves Abbey Road Reel ADT to widen the sound. Finally, you send the mix to Ozone 12, which helps you match loudness and tonal balance to your favorite reference track.
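That last step, matching loudness to a reference, can be sketched in Python too. This is a deliberately simplified assumption: it compares plain RMS levels, while mastering tools typically work with perceptual loudness measurements, and it says nothing about how Ozone does it. The function names are hypothetical, and both signals are assumed to be non-empty and not silent.

```python
import math


def rms(signal):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def match_gain_db(mix, reference):
    """Gain in dB to apply to `mix` so its RMS level equals the reference."""
    return 20 * math.log10(rms(reference) / rms(mix))


def apply_gain_db(signal, db):
    """Scale every sample by the linear equivalent of `db`."""
    factor = 10 ** (db / 20)
    return [x * factor for x in signal]


mix = [0.1, -0.1] * 4          # quiet mix
reference = [0.2, -0.2] * 4    # reference track at twice the level
needed = match_gain_db(mix, reference)
matched = apply_gain_db(mix, needed)
```

Here the mix needs about 6 dB of gain to sit at the same level as the reference. Matching level first makes it much easier to compare tone fairly, since louder almost always sounds "better" at first listen.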

In the end, every note and every emotion still came from real musicians. The tools just helped you deliver it in the best light possible.

This is what modern session musicianship looks like — a partnership between human feel and machine precision. You still need to play, sing, and perform. You still need to make artistic decisions. But now you can do all of that faster, cleaner, and with more confidence than ever.

Final Thoughts: The Artist Is Still the Heart

If there’s one thing to take away, it’s this: AI doesn’t make you less of a musician. It helps you become a better one.

It lets you focus on what matters — creativity, expression, connection. The rhythm still comes from your hands, the melody from your voice, the emotion from your soul. AI just gives you the means to share that more clearly with the world.

So don’t fear it. Learn it. Use it. Let it show you what’s possible.

Because the future of music isn’t man or machine — it’s man and machine, working together in perfect harmony.

Referenced Tools & URLs:

- Waves InTrigger Drum Replacer: https://www.waves.com/plugins/intrigger-drum-replacer

- Slate Digital Trigger 2 Platinum: https://stevenslatedrums.com/trigger-2-platinum/

- UVI Drum Replacer: https://www.uvi.net/drum-replacer.html

- SoundID VoiceAI by Sonarworks: https://www.sonarworks.com/soundid-produce/voiceai

- Waves Abbey Road Reel ADT: https://www.muziker.com/waves-abbey-road-reel-adt

- iZotope Neutron 5: https://www.izotope.com/en/products/neutron.html

- iZotope Ozone 12: https://www.izotope.com/en/products/ozone.html

- Waves Clarity VX: https://www.waves.com/plugins/clarity-vx

Subscribe to Our Newsletter

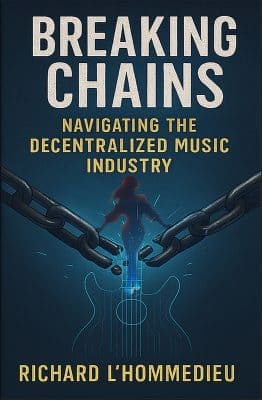

Order the New Book From Making a Scene

Breaking Chains – Navigating the Decentralized Music Industry

Breaking Chains is a groundbreaking guide for independent musicians ready to take control of their careers in the rapidly evolving world of decentralized music. From blockchain-powered royalties to NFTs, DAOs, and smart contracts, this book breaks down complex Web3 concepts into practical strategies that help artists earn more, connect directly with fans, and retain creative freedom. With real-world examples, platform recommendations, and step-by-step guidance, it empowers musicians to bypass traditional gatekeepers and build sustainable careers on their own terms.

More than just a tech manual, Breaking Chains explores the bigger picture—how decentralization can rebuild the music industry’s middle class, strengthen local economies, and transform fans into stakeholders in an artist’s journey. Whether you’re an emerging musician, a veteran indie artist, or a curious fan of the next music revolution, this book is your roadmap to the future of fair, transparent, and community-driven music.

Get your Limited Edition Signed and Numbered (Only 50 copies Available) Free Shipping Included

Discover more from Making A Scene!

Subscribe to get the latest posts sent to your email.